Tasks

What is Kubernetes? Explain it in your own words, and explain why we call it k8s.

Kubernetes, often abbreviated as k8s, is a container orchestration platform that automates the deployment, scaling, and operation of application containers across clusters of hosts. Compared with Docker on its own, it adds capabilities such as multi-host (cluster) management, auto-scaling, auto-healing, and enterprise-level features. In Kubernetes, the pod (a group of one or more containers) is the smallest deployable unit, much as a container is the basic unit in Docker.

The term "k8s" is a numeronym of "Kubernetes": the "8" stands for the eight letters between the "K" and the final "s". The short form is popular simply because it is quicker to write and say.
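The counting rule is simple enough to express in a few lines. This is purely illustrative: `numeronym` is a hypothetical helper, not part of any Kubernetes tooling, and the same rule produces other well-known abbreviations such as "i18n".

```python
def numeronym(word: str) -> str:
    """Abbreviate a word as: first letter + number of inner letters + last letter."""
    if len(word) <= 3:
        # Too short to shorten meaningfully; return it unchanged.
        return word
    return f"{word[0]}{len(word) - 2}{word[-1]}"


print(numeronym("Kubernetes").lower())   # k8s
print(numeronym("internationalization"))  # i18n
```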

What are the benefits of using k8s?

The following are the benefits of using k8s:

Multi-Host (Cluster) Management: Docker runs containers on a single host, so a container that consumes too much CPU or memory can starve the other containers on that host. Kubernetes manages containers across a cluster of hosts and controls resource allocation through per-container requests and limits: the scheduler places pods only on nodes with enough unrequested capacity, a container that exceeds its CPU limit is throttled, a container that exceeds its memory limit is terminated (OOM-killed), and pods can be evicted from a node that is under resource pressure.
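In practice, resource control in Kubernetes is expressed through per-container requests (used for scheduling) and limits (enforced at runtime). The enforcement itself happens in the Linux kernel via cgroups, configured by the kubelet and the container runtime. The sketch below only models the documented outcome under that assumption: CPU is a compressible resource, so usage above the limit is throttled, while memory is not, so exceeding the memory limit gets the container OOM-killed. `Limits` and `enforcement_outcome` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Limits:
    """Hypothetical container limits (not a real Kubernetes type)."""
    cpu_millicores: int  # e.g. 500 means half a CPU core
    memory_mib: int


def enforcement_outcome(cpu_usage_m: int, memory_usage_mib: int, limits: Limits) -> list:
    """Model what happens when a container's usage goes above its limits."""
    outcome = []
    if cpu_usage_m > limits.cpu_millicores:
        # CPU is compressible: the container keeps running, just slower.
        outcome.append("cpu_throttled")
    if memory_usage_mib > limits.memory_mib:
        # Memory is not compressible: the container is killed.
        outcome.append("oom_killed")
    return outcome


limits = Limits(cpu_millicores=500, memory_mib=256)
print(enforcement_outcome(800, 128, limits))  # ['cpu_throttled']
print(enforcement_outcome(200, 300, limits))  # ['oom_killed']
```

A killed container is then handled by the pod's restart policy, which is where auto-healing takes over.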

Auto Scaling: Kubernetes can automatically adjust the number of running pod replicas to match workload demand, for example through the Horizontal Pod Autoscaler, which scales on observed metrics such as CPU utilization. This removes the need for manual intervention during sudden spikes in traffic or workload. With Docker alone, scaling is a manual step, which makes it less flexible in dynamic environments.
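The Horizontal Pod Autoscaler documentation describes its core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no change made when that ratio is within a tolerance of 1.0 (0.1 by default). Here is a minimal Python sketch of that rule. The `min_replicas`/`max_replicas` bounds stand in for the HPA's `minReplicas`/`maxReplicas` fields, their default values here are arbitrary, and the real controller adds behavior (stabilization windows, handling of missing metrics) that is not modeled.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Core HPA rule: ceil(current * currentMetric / targetMetric), clamped to bounds."""
    ratio = current_metric / target_metric
    # Ignore small deviations so the replica count does not flap.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# 3 pods averaging 90% CPU against a 60% target: ceil(3 * 1.5) = 5 pods.
print(desired_replicas(3, 90, 60))  # 5
```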

Auto Healing: Kubernetes monitors the health of containers and automatically restarts failed ones; if a pod or the node it runs on fails, controllers such as ReplicaSets create replacement pods, possibly on other nodes. This keeps applications available and responsive even when individual containers fail. Standalone Docker offers restart policies for containers that exit, but it cannot reschedule containers onto another host when their host fails.
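One concrete piece of auto-healing is the kubelet's restart back-off. Per the Pod lifecycle documentation, a container that keeps exiting is restarted with an exponential back-off delay (10s, 20s, 40s, and so on) capped at five minutes, which is what the `CrashLoopBackOff` status reflects. The sketch below only computes that default delay sequence; `restart_delays` is a hypothetical helper, and it ignores the back-off reset that happens once a container has run successfully for a while.

```python
def restart_delays(restarts: int, base_seconds: int = 10, cap_seconds: int = 300) -> list:
    """Delay before each of the first `restarts` restarts: doubles each time, then capped."""
    return [min(base_seconds * 2 ** attempt, cap_seconds) for attempt in range(restarts)]


print(restart_delays(7))  # [10, 20, 40, 80, 160, 300, 300]
```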

Enterprise-Level Support: Kubernetes provides built-in or pluggable support for features that enterprise deployments need, including load balancing (Services), firewall-style traffic rules (NetworkPolicies, enforced by a compatible network plugin), and API gateway-style routing (Ingress and the Gateway API), alongside the auto-scaling and auto-healing described above. These features help deliver high availability, security, and scalability in production environments. Docker on its own does not provide these cluster-wide capabilities, which is one reason Kubernetes is a preferred choice for enterprise deployments.

Explain the architecture of Kubernetes.

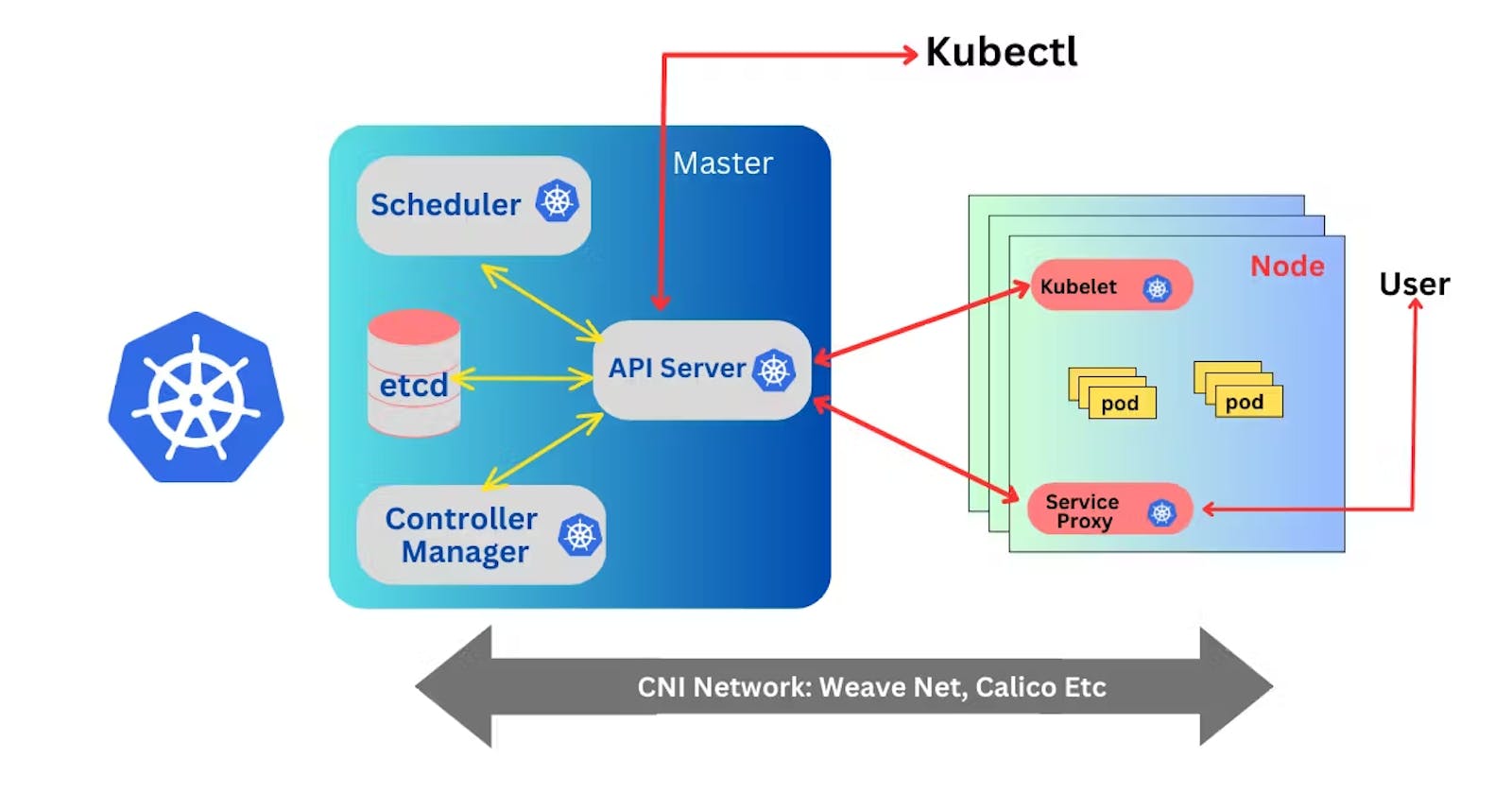

Kubernetes architecture comprises multiple nodes, including a master node and one or more worker nodes. The master node serves as the control plane, managing the cluster's state and orchestrating operations, while the worker nodes, also known as the data plane, execute the tasks assigned by the control plane.

Master Node (Control Plane):

API Server: Acts as the primary management hub, accepting requests from users and components, and serving the Kubernetes API.

Scheduler: Assigns pods to nodes based on resource availability, policies, and workload requirements.
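The real kube-scheduler works in two phases: it filters out nodes that cannot run the pod, then scores the remaining nodes, with both phases implemented as plugins (for example, the NodeResourcesFit plugin checks resource requests). A minimal sketch of that shape, assuming only CPU and memory requests matter; `Node` and `schedule` are hypothetical, and the scoring rule (most leftover CPU, then memory) is a stand-in for the real scoring plugins.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    free_cpu_m: int    # allocatable CPU not yet requested by other pods, in millicores
    free_mem_mib: int  # allocatable memory not yet requested by other pods, in MiB


def schedule(cpu_request_m: int, mem_request_mib: int, nodes: List[Node]) -> Optional[str]:
    # Filter phase: keep only nodes that can fit the pod's requests.
    feasible = [n for n in nodes
                if n.free_cpu_m >= cpu_request_m and n.free_mem_mib >= mem_request_mib]
    if not feasible:
        return None  # no node fits: the pod would stay Pending
    # Score phase (simplified): prefer the node with the most CPU, then memory, left over.
    best = max(feasible, key=lambda n: (n.free_cpu_m - cpu_request_m,
                                        n.free_mem_mib - mem_request_mib))
    return best.name


nodes = [Node("node-a", 500, 1024), Node("node-b", 2000, 4096), Node("node-c", 3000, 256)]
print(schedule(1000, 512, nodes))  # node-b
```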

Controller Manager: Monitors the cluster's state and ensures that the desired state is maintained. It includes various controllers responsible for managing replication, endpoints, and other resources.
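Controllers follow a reconciliation ("control loop") pattern: observe the current state, compare it with the desired state, and act on the difference. A minimal sketch of that comparison for a ReplicaSet-like controller, assuming pods are identified only by name. `reconcile` is hypothetical; the real ReplicaSet controller watches the API server rather than being called directly, and it applies its own ranking when choosing which pods to delete.

```python
from typing import Dict, List


def reconcile(desired_replicas: int, running_pods: List[str]) -> Dict[str, object]:
    """Compare desired vs. observed state and return the actions needed to converge."""
    diff = desired_replicas - len(running_pods)
    if diff > 0:
        # Too few pods (e.g. one crashed or its node died): create replacements.
        return {"create": diff, "delete": []}
    if diff < 0:
        # Too many pods: remove the surplus (here simply the last ones listed).
        return {"create": 0, "delete": running_pods[desired_replicas:]}
    return {"create": 0, "delete": []}  # already converged: nothing to do


print(reconcile(3, ["web-1"]))                    # {'create': 2, 'delete': []}
print(reconcile(1, ["web-1", "web-2", "web-3"]))  # {'create': 0, 'delete': ['web-2', 'web-3']}
```

Running this comparison repeatedly, rather than reacting to a single command once, is what lets the cluster recover from failures without anyone re-issuing the original request.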

etcd: Consistent and highly available key-value store that stores all cluster data, including configuration details and the current state of the cluster.

Worker Node (Data Plane):

Kubelet: Responsible for managing the containers and pods running on the node. It interacts with the control plane to ensure pods are running as expected.

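Kube-proxy: Runs on each node and maintains the network rules that implement Services, forwarding and load-balancing traffic sent to a Service across the pods backing it, whether that traffic comes from other pods or from external clients.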
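A Service gives a set of pods one stable virtual IP and port; kube-proxy programs kernel rules so that connections to that address reach one of the backing pods. In iptables mode the backend is chosen at random, while IPVS mode supports round-robin among other algorithms. The toy model below uses round-robin so its output is deterministic; `ServiceProxy` and all addresses are hypothetical, and the real kube-proxy does not sit in the data path like this object does.

```python
from typing import List


class ServiceProxy:
    """Toy model of a Service: a stable virtual IP/port fronting changing pod endpoints."""

    def __init__(self, cluster_ip: str, port: int, endpoints: List[str]) -> None:
        self.cluster_ip = cluster_ip
        self.port = port
        self.endpoints = list(endpoints)  # "podIP:targetPort" strings
        self._next = 0

    def route(self, dst_ip: str, dst_port: int) -> str:
        """Pick the backend pod for a connection addressed to the Service."""
        if (dst_ip, dst_port) != (self.cluster_ip, self.port):
            raise ValueError("traffic is not addressed to this Service")
        if not self.endpoints:
            raise RuntimeError("Service has no ready endpoints")
        backend = self.endpoints[self._next % len(self.endpoints)]
        self._next += 1  # round-robin: advance to the next endpoint
        return backend


svc = ServiceProxy("10.96.0.20", 80, ["10.244.1.5:8080", "10.244.2.7:8080"])
for _ in range(3):
    print(svc.route("10.96.0.20", 80))
# 10.244.1.5:8080, then 10.244.2.7:8080, then 10.244.1.5:8080
```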

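Container Runtime: Executes the containers that make up each pod. Kubernetes works with any runtime that implements the Container Runtime Interface (CRI), such as containerd and CRI-O. Docker Engine can still be used through the cri-dockerd adapter, since the built-in dockershim was removed in Kubernetes 1.24.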

Additional Point:

- kubectl: While not a component of the Kubernetes architecture, kubectl is a command-line tool used by administrators and developers to interact with the Kubernetes API server.

Overall, Kubernetes architecture is designed to provide a scalable, resilient, and self-healing platform for deploying and managing containerized applications across distributed environments. Each component plays a critical role in ensuring the cluster's stability, efficiency, and security.

What is Control Plane?

The control plane is a fundamental component of Kubernetes responsible for maintaining the desired state of the cluster. It consists of several interconnected components, including the API server, scheduler, controller manager, and etcd. The control plane coordinates and manages various actions across the cluster, such as scheduling pods, scaling applications, and maintaining high availability. Essentially, it serves as the brain of the Kubernetes cluster, orchestrating the activities of worker nodes to ensure the smooth operation of containerized workloads.

Write the difference between kubectl and kubelet.

Kubectl:

Command-line tool for interacting with the Kubernetes API server.

Used for deploying, managing, and troubleshooting applications and resources within the cluster.

Kubelet:

Agent that runs on every node in the cluster (the worker nodes, and in many setups the control plane nodes as well).

Responsible for managing containers and pods on the node based on instructions received from the control plane.

Handles tasks such as pulling container images, starting and stopping containers, and reporting node status.

Explain the role of the API server.

The API server is a critical component of the Kubernetes control plane, responsible for managing cluster state, serving the Kubernetes API, and enforcing security policies to ensure the integrity and reliability of the cluster. Its responsibilities include:

Accepting and Validating Requests: The API server receives requests from various sources, including users via kubectl, controllers, and other Kubernetes components. It validates these requests to ensure they comply with the cluster's configuration and security policies.
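Validation covers schema and field checks, followed by admission control (built-in admission plugins plus any configured admission webhooks). One small, real rule is that pod names must be valid DNS subdomain names per RFC 1123: at most 253 characters, only lowercase alphanumerics, '-' and '.', starting and ending with an alphanumeric. The sketch below checks that rule and that every container names an image. `validate_pod` is hypothetical, and its error strings are only loosely modeled on the API server's messages.

```python
import re
from typing import List

# RFC 1123 subdomain pattern used for many Kubernetes object names, including pods.
DNS1123_SUBDOMAIN = re.compile(r"[a-z0-9]([-a-z0-9]*[a-z0-9])?(\.[a-z0-9]([-a-z0-9]*[a-z0-9])?)*")


def validate_pod(manifest: dict) -> List[str]:
    """Return a list of validation errors; an empty list means the request passes."""
    errors = []
    name = manifest.get("metadata", {}).get("name", "")
    if not name:
        errors.append("metadata.name: Required value")
    elif len(name) > 253 or not DNS1123_SUBDOMAIN.fullmatch(name):
        errors.append("metadata.name: Invalid value")
    containers = manifest.get("spec", {}).get("containers", [])
    if not containers:
        errors.append("spec.containers: Required value")
    for i, container in enumerate(containers):
        if not container.get("image"):
            errors.append(f"spec.containers[{i}].image: Required value")
    return errors


ok = {"metadata": {"name": "web-1"},
      "spec": {"containers": [{"name": "web", "image": "nginx"}]}}
print(validate_pod(ok))  # []
```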

Maintaining the Cluster State: The API server is the only component that reads and writes etcd directly, which makes it the gateway to the cluster's single source of truth. It stores and manages the persistent state of Kubernetes objects, such as pods, services, deployments, and replica sets, in etcd, the cluster's distributed key-value store.
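Because many clients update the same objects, every Kubernetes object carries a `metadata.resourceVersion`. When a client sends an update based on a stale version, the API server rejects it with HTTP 409 Conflict instead of silently overwriting someone else's change. The toy store below models that optimistic-concurrency check. `ObjectStore`, `Conflict`, and the `default/web` key format are hypothetical, `resourceVersion` is kept at the top level for brevity, and in the default etcd-backed storage the real API server derives resource versions from etcd revisions.

```python
from typing import Dict


class Conflict(Exception):
    """Stands in for the API server's HTTP 409 Conflict response."""


class ObjectStore:
    """Toy versioned store illustrating resourceVersion-based optimistic concurrency."""

    def __init__(self) -> None:
        self._objects: Dict[str, dict] = {}
        self._revision = 0  # global counter, loosely like an etcd revision

    def _write(self, key: str, obj: dict) -> dict:
        self._revision += 1
        stored = {**obj, "resourceVersion": str(self._revision)}
        self._objects[key] = stored
        return dict(stored)  # return a copy, as a client would receive

    def create(self, key: str, obj: dict) -> dict:
        if key in self._objects:
            raise Conflict(f"{key} already exists")
        return self._write(key, obj)

    def get(self, key: str) -> dict:
        return dict(self._objects[key])

    def update(self, key: str, obj: dict) -> dict:
        current = self._objects[key]
        # Reject writes based on a stale copy instead of silently overwriting.
        if obj.get("resourceVersion") != current["resourceVersion"]:
            raise Conflict(f"{key} was modified; re-read and retry")
        return self._write(key, obj)


store = ObjectStore()
alice = store.create("default/web", {"replicas": 1})
bob = store.get("default/web")      # both clients now hold resourceVersion "1"
alice["replicas"] = 2
store.update("default/web", alice)  # succeeds; resourceVersion becomes "2"
bob["replicas"] = 3
try:
    store.update("default/web", bob)  # stale resourceVersion "1"
except Conflict as err:
    print("409 Conflict:", err)
```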

Serving RESTful API Endpoints: The API server exposes a RESTful interface that clients can use to interact with the Kubernetes cluster. This interface allows users to perform operations like deploying applications, scaling resources, querying cluster status, and managing configuration.
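The REST paths follow a fixed pattern: core-group resources such as pods live under `/api/v1`, resources in named groups live under `/apis/<group>/<version>`, and namespaced resources add a `namespaces/<namespace>` segment. For example, `kubectl get pods -n default` sends a GET to `/api/v1/namespaces/default/pods`, which you can observe by raising kubectl's log verbosity (for example `-v=6`). `resource_path` below is a hypothetical helper that builds these paths.

```python
from typing import Optional


def resource_path(group: str, version: str, resource: str,
                  namespace: Optional[str] = None, name: Optional[str] = None) -> str:
    """Build a Kubernetes API path; the core group ("") lives under /api, others under /apis."""
    base = f"/api/{version}" if group == "" else f"/apis/{group}/{version}"
    parts = [base]
    if namespace:  # namespaced resources; cluster-scoped ones (e.g. nodes) omit this
        parts.append(f"namespaces/{namespace}")
    parts.append(resource)
    if name:  # a single object rather than a collection
        parts.append(name)
    return "/".join(parts)


print(resource_path("", "v1", "pods", namespace="default"))
# /api/v1/namespaces/default/pods
print(resource_path("apps", "v1", "deployments", namespace="default", name="web"))
# /apis/apps/v1/namespaces/default/deployments/web
```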

Authentication and Authorization: The API server handles authentication and authorization of incoming requests. It verifies the identity of clients and checks their permissions to ensure they have the necessary privileges to perform the requested actions.
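Authentication establishes who the caller is; authorization, most commonly RBAC, decides whether that caller may perform a verb on a resource. RBAC Roles and ClusterRoles list rules made of API groups, resources, and verbs, and permissions are purely additive. The sketch below shows only the rule-matching step under stated simplifications: `PolicyRule` and `is_allowed` are hypothetical names, and role bindings, `resourceNames`, subresources, and non-resource URLs are ignored.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PolicyRule:
    """Simplified RBAC rule: which verbs are allowed on which resources in which API groups."""
    api_groups: List[str]  # "" is the core group (pods, services, ...)
    resources: List[str]
    verbs: List[str]


def _matches(allowed: List[str], requested: str) -> bool:
    return "*" in allowed or requested in allowed


def is_allowed(rules: List[PolicyRule], api_group: str, resource: str, verb: str) -> bool:
    # RBAC is additive: a request is allowed if any rule matches; there are no deny rules.
    return any(_matches(r.api_groups, api_group) and _matches(r.resources, resource)
               and _matches(r.verbs, verb) for r in rules)


pod_reader = [PolicyRule(api_groups=[""], resources=["pods"], verbs=["get", "list", "watch"])]
print(is_allowed(pod_reader, "", "pods", "list"))    # True
print(is_allowed(pod_reader, "", "pods", "delete"))  # False
```

On a real cluster, `kubectl auth can-i list pods` asks the API server the same kind of question for your current identity.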

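Event Notification: The API server lets clients watch resources and streams a notification (ADDED, MODIFIED, or DELETED) whenever a watched object changes. Controllers and administrators use these watches to react to changes dynamically and keep the cluster healthy. Separately, components such as the kubelet and controllers record Event objects through the API server, which you can list with `kubectl get events`.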
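To make the notification idea concrete, here is a toy in-memory store that pushes changes to subscribers using the same event type names that Kubernetes watch streams use (ADDED, MODIFIED, DELETED; real streams can also carry BOOKMARK and ERROR). This is an assumption-laden sketch: `WatchableStore` is hypothetical, and a real watch is a long-lived HTTP response (requested with `?watch=true`, or `kubectl get pods --watch`) rather than an in-process callback.

```python
from typing import Callable, Dict, List

# Callback signature: (event_type, key, object)
WatchCallback = Callable[[str, str, dict], None]


class WatchableStore:
    """Toy object store that notifies every watcher on each change."""

    def __init__(self) -> None:
        self._objects: Dict[str, dict] = {}
        self._watchers: List[WatchCallback] = []

    def watch(self, callback: WatchCallback) -> None:
        self._watchers.append(callback)

    def _notify(self, event_type: str, key: str, obj: dict) -> None:
        for callback in self._watchers:
            callback(event_type, key, obj)

    def put(self, key: str, obj: dict) -> None:
        event_type = "MODIFIED" if key in self._objects else "ADDED"
        self._objects[key] = obj
        self._notify(event_type, key, obj)

    def delete(self, key: str) -> None:
        obj = self._objects.pop(key)
        self._notify("DELETED", key, obj)


store = WatchableStore()
store.watch(lambda event_type, key, obj: print(event_type, key, obj))
store.put("default/web-1", {"phase": "Pending"})  # ADDED default/web-1 {'phase': 'Pending'}
store.put("default/web-1", {"phase": "Running"})  # MODIFIED default/web-1 {'phase': 'Running'}
store.delete("default/web-1")                     # DELETED default/web-1 {'phase': 'Running'}
```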

I hope this article gave you a better understanding of Kubernetes architecture. Let me know your thoughts in the comments.

Thanks for reading!

Happy Learning!